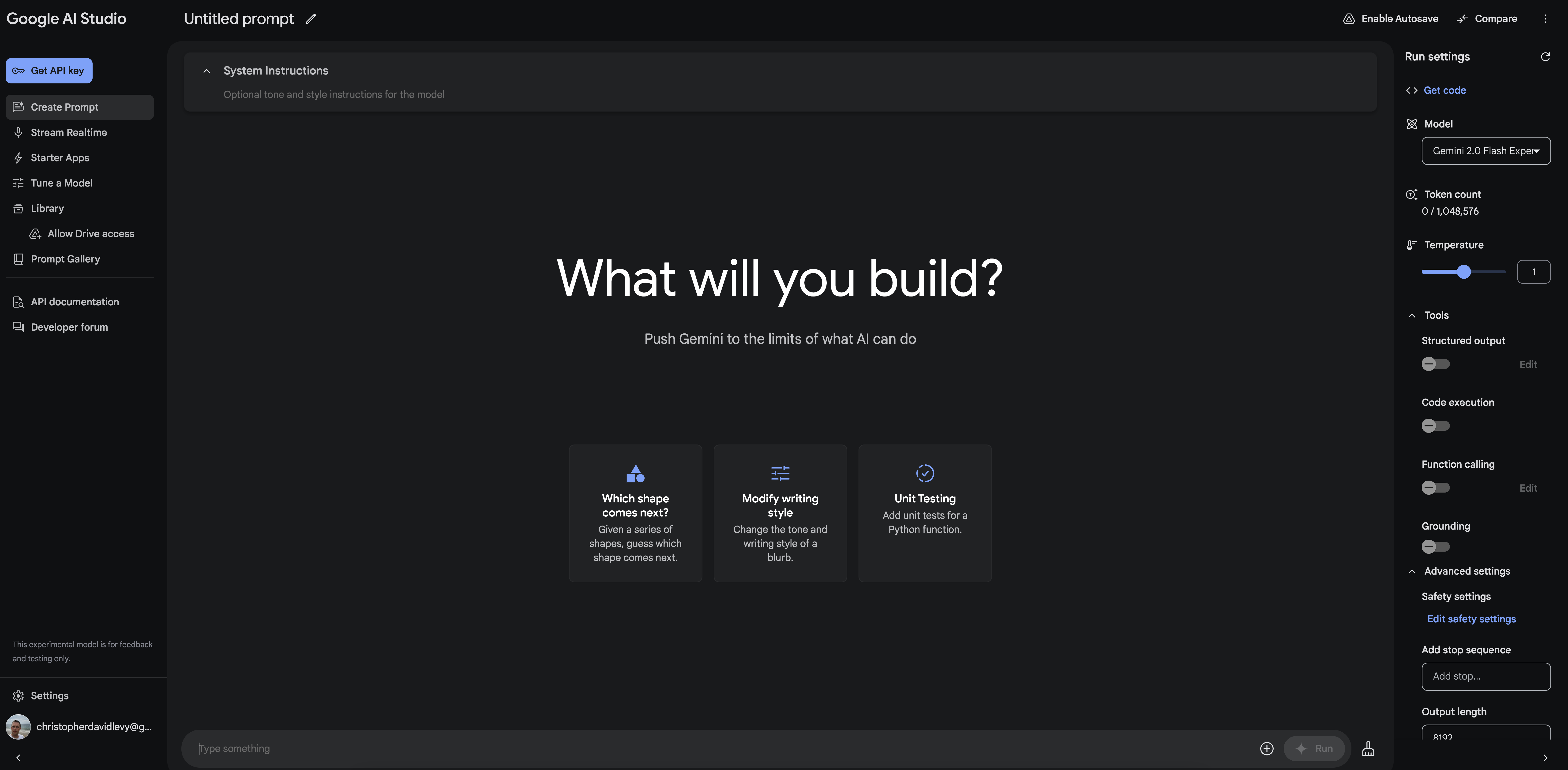

Code

from dotenv import load_dotenv

from IPython.display import Markdown

load_dotenv() # GOOGLE_API_KEY in .env

from google import genai

MODEL_ID = "gemini-2.0-flash-exp"

client = genai.Client()

response = client.models.generate_content(model=MODEL_ID, contents="Can you explain how LLMs work? Go into lots of detail.")

Markdown(response.text)Okay, let’s dive deep into the fascinating world of Large Language Models (LLMs). This is a complex topic, so we’ll break it down into digestible parts. We’ll cover the core concepts, the architecture, the training process, and some of the nuances that make these models so powerful and, sometimes, so perplexing.

What are Large Language Models (LLMs)?

At their heart, LLMs are sophisticated computer programs designed to understand and generate human-like text. They are large because they have a massive number of parameters (the internal settings that determine their behavior) and they are language models because their primary function is to model the patterns and relationships within language.

Here’s a more detailed breakdown:

Statistical Nature: LLMs don’t “understand” language in the way humans do. Instead, they operate on statistics and probabilities. They learn the likelihood of words and phrases appearing in sequences, given the context. Think of it like predicting the next word in a sentence based on what you’ve already read. They build up a complex web of associations between words, allowing them to generate coherent and contextually relevant text.

Neural Networks: LLMs are built upon artificial neural networks, a type of machine learning algorithm inspired by the structure of the human brain. These networks consist of interconnected layers of nodes (neurons) that process information. The connections between these nodes have adjustable weights, which are the “parameters” of the model. Learning happens by adjusting these weights to minimize prediction errors.

Transformers: Most modern LLMs use a specific type of neural network architecture called a Transformer. This architecture is particularly well-suited for processing sequential data like text. We’ll explore transformers in more detail later.

Key Components of an LLM:

- Tokenization: Before text can be fed into an LLM, it needs to be broken down into smaller units called tokens. These tokens can be individual words, parts of words (subwords), or even characters. For example, the word “unbelievable” might be tokenized into “un”, “be”, “liev”, “able”. Tokenization helps the model handle complex words and out-of-vocabulary (OOV) words.

- Embedding: Once tokenized, each token is converted into a numerical representation called an embedding. Embeddings capture the semantic meaning of the token, meaning that tokens with similar meanings will have similar embeddings. This allows the model to understand relationships between words.

- Transformer Architecture: The core of most LLMs. This architecture consists of several interconnected components, most notably:

- Encoder: Processes the input sequence (e.g., a question or prompt) and creates a contextualized representation of the input.

- Decoder: Uses the encoder’s representation and generates the output sequence (e.g., an answer or continuation of the text).

- Attention Mechanism: Allows the model to focus on the most relevant parts of the input sequence when generating the output. It learns which words are important for understanding the current word being processed. This is the heart of the Transformer’s ability to handle long-range dependencies in text.

- Feedforward Networks (FFNs): These are simple neural networks applied to each token’s representation individually after the attention layer. FFNs add non-linearity and increase the capacity of the model.

- Layer Normalization: Normalizes the outputs of each layer to improve training stability and prevent vanishing gradients.

- Output Layer: The final layer that maps the hidden representation of the text to a probability distribution over the vocabulary of all possible tokens. The token with the highest probability is chosen as the predicted next token.

How Transformers Work in Detail

The attention mechanism is crucial, so let’s break it down further:

- Queries, Keys, and Values: Each token is transformed into three vectors:

- Query (Q): What the token is “asking” for.

- Key (K): What the token is “offering”.

- Value (V): The actual content of the token.

- Attention Weights: The attention mechanism computes attention weights by taking the dot product of the query vector with all the key vectors in the input sequence. These dot products are then scaled and passed through a softmax function to normalize them into probabilities. Higher weights indicate more relevant tokens.

- Weighted Sum of Values: The attention weights are used to take a weighted sum of the value vectors. This sum represents the contextualized representation of the current token, taking into account its relationships with other tokens.

- Multi-Headed Attention: Transformers typically employ multi-headed attention, meaning they perform this attention calculation multiple times using different sets of query, key, and value transformations. This allows the model to capture different kinds of relationships between words.

The Training Process: From Randomness to Language Mastery

LLMs are trained through a computationally intensive process called pre-training followed by fine-tuning.

- Pre-Training:

- Massive Data: LLMs are trained on vast datasets of text, typically scraped from the internet (e.g., books, web pages, code repositories). This process is often called unsupervised learning, as there are no labels for what is correct, and the model discovers patterns through self-supervised learning.

- Next-Word Prediction: The pre-training objective is usually next-word prediction. The model is given a sequence of words and is trained to predict the next word in the sequence. This seemingly simple task is powerful enough for the model to learn intricate patterns of language structure, syntax, and even some world knowledge.

- Adjusting Parameters: The model’s parameters (the weights and biases of the neural network) are adjusted through a process called backpropagation. During backpropagation, the difference between the model’s prediction and the actual next word is calculated, and this “error” signal is used to update the parameters in the direction that reduces the error.

- Computational Resources: Pre-training requires enormous computational resources, including powerful GPUs and large amounts of time. It’s a massive undertaking.

- Fine-Tuning:

- Task-Specific Data: Once pre-trained, the LLM can be fine-tuned on a smaller, task-specific dataset. For example, you might fine-tune a pre-trained model on a dataset of questions and answers for use in a chatbot, or on a dataset of labeled text for sentiment analysis.

- Supervised Learning: Fine-tuning uses supervised learning methods, meaning that the data includes both input and the desired output, which allows the model to learn specific tasks.

- Adapting to New Tasks: Fine-tuning allows LLMs to adapt their general language skills to perform specific tasks. For example, an LLM fine-tuned on a dialogue dataset will be better at generating conversational responses than the same model in its pre-trained state.

- Instruction Following: Fine-tuning can also be done on instruction following datasets, which allow LLMs to better understand human instructions and respond accordingly. This is crucial for using them effectively.

Key Nuances and Considerations

- Context Window: LLMs have a limited “context window,” meaning they can only process a certain number of tokens at a time. This limitation can be a challenge when dealing with long texts or conversations.

- Bias and Fairness: LLMs are trained on data that may contain societal biases, which can be reflected in their output. Researchers are working to mitigate bias in LLMs.

- Hallucination: LLMs are known to “hallucinate,” meaning they can generate outputs that are factually incorrect or nonsensical. This is partly because they are trained to be fluent and coherent, rather than factually correct.

- Interpretability: Understanding why an LLM makes a certain prediction is often challenging. These are often seen as “black boxes” because of their complexity.

- Continual Development: The field of LLMs is rapidly evolving, with new architectures and techniques being developed constantly.

In Summary

LLMs are incredibly powerful tools that have revolutionized the field of natural language processing. They work by statistically modeling language through massive neural networks, particularly the Transformer architecture. They learn from vast datasets through pre-training and can then be fine-tuned for specific tasks.

However, it’s important to remember they are based on statistics, not understanding, and they have their limitations and potential biases. They are an exciting technology, but one we must use responsibly and with an awareness of their capabilities and shortcomings.

This explanation is extensive, but the field is constantly evolving. If you have more specific questions, feel free to ask! I’d be happy to elaborate on any particular aspect.